|

|

{

|

|||

|

|

"cells": [

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"metadata": {

|

|||

|

|

"id": "XMMppWbnG3dN"

|

|||

|

|

},

|

|||

|

|

"source": [

|

|||

|

|

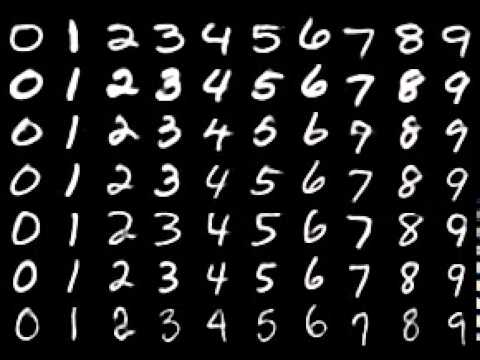

"## Auto-encodeurs\n",

|

|||

|

|

"\n",

|

|||

|

|

"L'objectif de ce TP est de manipuler des auto-encodeurs sur un exemple simple : la base de données MNIST. L'idée est de pouvoir visualiser les concepts vus en cours, et notamment d'illustrer la notion d'espace latent.\n",

|

|||

|

|

"\n",

|

|||

|

|

"Pour rappel, vous avez déjà manipulé les données MNIST en Analyse de Données en première année. Les images MNIST sont des images en niveaux de gris, de taille 28x28 pixels, représentant des chiffres manuscrits de 0 à 9.\n",

|

|||

|

|

"\n",

|

|||

|

|

"\n",

|

|||

|

|

"\n",

|

|||

|

|

"Pour démarrer, nous vous fournissons un code permettant de créer un premier auto-encodeur simple, de charger les données MNIST et d'entraîner cet auto-encodeur. **L'autoencodeur n'est pas convolutif !** (libre à vous de le transformer pour qu'il le soit, plus tard dans le TP)"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "code",

|

|||

|

|

"execution_count": 13,

|

|||

|

|

"metadata": {

|

|||

|

|

"id": "nndrdDrlSkho"

|

|||

|

|

},

|

|||

|

|

"outputs": [

|

|||

|

|

{

|

|||

|

|

"name": "stdout",

|

|||

|

|

"output_type": "stream",

|

|||

|

|

"text": [

|

|||

|

|

"Model: \"model_11\"\n",

|

|||

|

|

"_________________________________________________________________\n",

|

|||

|

|

" Layer (type) Output Shape Param # \n",

|

|||

|

|

"=================================================================\n",

|

|||

|

|

" input_7 (InputLayer) [(None, 784)] 0 \n",

|

|||

|

|

" \n",

|

|||

|

|

" model_9 (Functional) (None, 32) 104608 \n",

|

|||

|

|

" \n",

|

|||

|

|

" model_10 (Functional) (None, 784) 105360 \n",

|

|||

|

|

" \n",

|

|||

|

|

"=================================================================\n",

|

|||

|

|

"Total params: 209,968\n",

|

|||

|

|

"Trainable params: 209,968\n",

|

|||

|

|

"Non-trainable params: 0\n",

|

|||

|

|

"_________________________________________________________________\n",

|

|||

|

|

"Model: \"model_9\"\n",

|

|||

|

|

"_________________________________________________________________\n",

|

|||

|

|

" Layer (type) Output Shape Param # \n",

|

|||

|

|

"=================================================================\n",

|

|||

|

|

" input_7 (InputLayer) [(None, 784)] 0 \n",

|

|||

|

|

" \n",

|

|||

|

|

" dense_12 (Dense) (None, 128) 100480 \n",

|

|||

|

|

" \n",

|

|||

|

|

" dense_13 (Dense) (None, 32) 4128 \n",

|

|||

|

|

" \n",

|

|||

|

|

"=================================================================\n",

|

|||

|

|

"Total params: 104,608\n",

|

|||

|

|

"Trainable params: 104,608\n",

|

|||

|

|

"Non-trainable params: 0\n",

|

|||

|

|

"_________________________________________________________________\n",

|

|||

|

|

"None\n",

|

|||

|

|

"Model: \"model_10\"\n",

|

|||

|

|

"_________________________________________________________________\n",

|

|||

|

|

" Layer (type) Output Shape Param # \n",

|

|||

|

|

"=================================================================\n",

|

|||

|

|

" input_8 (InputLayer) [(None, 32)] 0 \n",

|

|||

|

|

" \n",

|

|||

|

|

" dense_14 (Dense) (None, 128) 4224 \n",

|

|||

|

|

" \n",

|

|||

|

|

" dense_15 (Dense) (None, 784) 101136 \n",

|

|||

|

|

" \n",

|

|||

|

|

"=================================================================\n",

|

|||

|

|

"Total params: 105,360\n",

|

|||

|

|

"Trainable params: 105,360\n",

|

|||

|

|

"Non-trainable params: 0\n",

|

|||

|

|

"_________________________________________________________________\n",

|

|||

|

|

"None\n"

|

|||

|

|

]

|

|||

|

|

}

|

|||

|

|

],

|

|||

|

|

"source": [

|

|||

|

|

"from keras.layers import Input, Dense\n",
"from keras.models import Model\n",
"\n",
"# Input dimension (28 x 28 images flattened to 784 values)\n",
"input_img = Input(shape=(784,))\n",
"# Latent space dimension: PARAMETER TO EXPERIMENT WITH!\n",
"latent_dim = 32\n",
"\n",
"# Encoder definition\n",
"x = Dense(128, activation='relu')(input_img)\n",
"encoded = Dense(latent_dim, activation='linear')(x)\n",
"\n",
"# Decoder definition\n",
"decoder_input = Input(shape=(latent_dim,))\n",
"x = Dense(128, activation='relu')(decoder_input)\n",
"decoded = Dense(784, activation='sigmoid')(x)\n",
"\n",
"# Build separate models so the encoder and decoder can be used on their own\n",
"encoder = Model(input_img, encoded)\n",
"decoder = Model(decoder_input, decoded)\n",
"\n",
"# Build the full autoencoder model\n",
"encoded = encoder(input_img)\n",
"decoded = decoder(encoded)\n",
"autoencoder = Model(input_img, decoded)\n",

|

|||

|

|

"\n",

|

|||

|

|

"autoencoder.compile(optimizer='Adam', loss='binary_crossentropy')\n",

|

|||

|

|

"autoencoder.summary()\n",

|

|||

|

|

"print(encoder.summary())\n",

|

|||

|

|

"print(decoder.summary())"

|

|||

|

|

]

|

|||

|

|

},
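The parameter counts reported by `summary()` in the cell above can be checked by hand: a `Dense` layer has one weight per (input, unit) pair plus one bias per unit. The short sketch below redoes that arithmetic for the 784 → 128 → 32 → 128 → 784 architecture; the helper name `dense_params` is introduced here for illustration only and is not part of Keras.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Number of trainable parameters of a fully connected layer:
    n_inputs * n_units weights plus n_units biases."""
    return n_inputs * n_units + n_units


latent_dim = 32

# Encoder: 784 -> 128 -> latent_dim
encoder_params = dense_params(784, 128) + dense_params(128, latent_dim)
# Decoder: latent_dim -> 128 -> 784
decoder_params = dense_params(latent_dim, 128) + dense_params(128, 784)

print(encoder_params)                    # 104608
print(decoder_params)                    # 105360
print(encoder_params + decoder_params)   # 209968
```

Changing `latent_dim` only affects the two layers that touch the latent space, so the total grows by 257 parameters per extra latent dimension (129 in the encoder, 128 in the decoder).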

|

|||

|

|

{

|

|||

|

|

"cell_type": "code",

|

|||

|

|

"execution_count": 22,

|

|||

|

|

"metadata": {

|

|||

|

|

"id": "jwYr0GHCSlIq"

|

|||

|

|

},

|

|||

|

|

"outputs": [],

|

|||

|

|

"source": [

|

|||

|

|

"from keras.datasets import mnist\n",
"import numpy as np\n",
"\n",
"# Load the MNIST data and normalize pixel values to [0, 1]\n",
"(x_train, _), (x_test, _) = mnist.load_data()\n",
"\n",
"x_train = x_train.astype('float32') / 255.\n",
"x_test = x_test.astype('float32') / 255.\n",
"\n",
"# Flatten each input image into a 784-dimensional vector\n",
"x_train = np.reshape(x_train, (len(x_train), 784))\n",
"x_test = np.reshape(x_test, (len(x_test), 784))"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "code",

|

|||

|

|

"execution_count": 23,

|

|||

|

|

"metadata": {

|

|||

|

|

"id": "eKPiA41bSvxC"

|

|||

|

|

},

|

|||

|

|

"outputs": [

|

|||

|

|

{

|

|||

|

|

"name": "stdout",

|

|||

|

|

"output_type": "stream",

|

|||

|

|

"text": [

|

|||

|

|

"Epoch 1/50\n",

|

|||

|

|

"469/469 [==============================] - 3s 6ms/step - loss: 0.0784 - val_loss: 0.0781\n",

|

|||

|

|

"Epoch 2/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0783 - val_loss: 0.0780\n",

|

|||

|

|

"Epoch 3/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0783 - val_loss: 0.0781\n",

|

|||

|

|

"Epoch 4/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0783 - val_loss: 0.0780\n",

|

|||

|

|

"Epoch 5/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0782 - val_loss: 0.0779\n",

|

|||

|

|

"Epoch 6/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0782 - val_loss: 0.0780\n",

|

|||

|

|

"Epoch 7/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0781 - val_loss: 0.0779\n",

|

|||

|

|

"Epoch 8/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0781 - val_loss: 0.0778\n",

|

|||

|

|

"Epoch 9/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0781 - val_loss: 0.0778\n",

|

|||

|

|

"Epoch 10/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0780 - val_loss: 0.0778\n",

|

|||

|

|

"Epoch 11/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0780 - val_loss: 0.0777\n",

|

|||

|

|

"Epoch 12/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0780 - val_loss: 0.0777\n",

|

|||

|

|

"Epoch 13/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0779 - val_loss: 0.0777\n",

|

|||

|

|

"Epoch 14/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0779 - val_loss: 0.0777\n",

|

|||

|

|

"Epoch 15/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0779 - val_loss: 0.0775\n",

|

|||

|

|

"Epoch 16/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0778 - val_loss: 0.0775\n",

|

|||

|

|

"Epoch 17/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0778 - val_loss: 0.0776\n",

|

|||

|

|

"Epoch 18/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0778 - val_loss: 0.0776\n",

|

|||

|

|

"Epoch 19/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0777 - val_loss: 0.0775\n",

|

|||

|

|

"Epoch 20/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0777 - val_loss: 0.0775\n",

|

|||

|

|

"Epoch 21/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0777 - val_loss: 0.0775\n",

|

|||

|

|

"Epoch 22/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0776 - val_loss: 0.0775\n",

|

|||

|

|

"Epoch 23/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0776 - val_loss: 0.0774\n",

|

|||

|

|

"Epoch 24/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0776 - val_loss: 0.0774\n",

|

|||

|

|

"Epoch 25/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0776 - val_loss: 0.0774\n",

|

|||

|

|

"Epoch 26/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0775 - val_loss: 0.0774\n",

|

|||

|

|

"Epoch 27/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0775 - val_loss: 0.0774\n",

|

|||

|

|

"Epoch 28/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0775 - val_loss: 0.0773\n",

|

|||

|

|

"Epoch 29/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0775 - val_loss: 0.0773\n",

|

|||

|

|

"Epoch 30/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0774 - val_loss: 0.0773\n",

|

|||

|

|

"Epoch 31/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0774 - val_loss: 0.0772\n",

|

|||

|

|

"Epoch 32/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0774 - val_loss: 0.0774\n",

|

|||

|

|

"Epoch 33/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0774 - val_loss: 0.0773\n",

|

|||

|

|

"Epoch 34/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0774 - val_loss: 0.0772\n",

|

|||

|

|

"Epoch 35/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0774 - val_loss: 0.0772\n",

|

|||

|

|

"Epoch 36/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0773 - val_loss: 0.0774\n",

|

|||

|

|

"Epoch 37/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0773 - val_loss: 0.0771\n",

|

|||

|

|

"Epoch 38/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0773 - val_loss: 0.0772\n",

|

|||

|

|

"Epoch 39/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0773 - val_loss: 0.0772\n",

|

|||

|

|

"Epoch 40/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0773 - val_loss: 0.0772\n",

|

|||

|

|

"Epoch 41/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0773 - val_loss: 0.0771\n",

|

|||

|

|

"Epoch 42/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0773 - val_loss: 0.0772\n",

|

|||

|

|

"Epoch 43/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0772 - val_loss: 0.0771\n",

|

|||

|

|

"Epoch 44/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0772 - val_loss: 0.0771\n",

|

|||

|

|

"Epoch 45/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0772 - val_loss: 0.0770\n",

|

|||

|

|

"Epoch 46/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0772 - val_loss: 0.0771\n",

|

|||

|

|

"Epoch 47/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0772 - val_loss: 0.0771\n",

|

|||

|

|

"Epoch 48/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0771 - val_loss: 0.0771\n",

|

|||

|

|

"Epoch 49/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0771 - val_loss: 0.0771\n",

|

|||

|

|

"Epoch 50/50\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0771 - val_loss: 0.0770\n"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"data": {

|

|||

|

|

"text/plain": [

|

|||

|

|

"<keras.callbacks.History at 0x7f5274368700>"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

"execution_count": 23,

|

|||

|

|

"metadata": {},

|

|||

|

|

"output_type": "execute_result"

|

|||

|

|

}

|

|||

|

|

],

|

|||

|

|

"source": [

|

|||

|

|

"# Train the autoencoder. The test data is used here to monitor the\n",
"# reconstruction error on data not seen during training, and thus\n",
"# to detect overfitting.\n",
"autoencoder.fit(x_train, x_train,\n",
"                epochs=50,\n",
"                batch_size=128,\n",
"                shuffle=True,\n",
"                validation_data=(x_test, x_test))"

|

|||

|

|

]

|

|||

|

|

},
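The `loss` and `val_loss` figures in the training log are averages over pixels and images. As a rough illustration, and assuming a per-pixel binary cross-entropy on a sigmoid output (the formulation that exercise 1 of this lab asks for), the sketch below computes that quantity next to the mean squared error for a handful of made-up pixel values. The function names and the sample values are illustrative assumptions, not taken from the notebook.

```python
import math


def binary_cross_entropy(targets, predictions, eps=1e-7):
    """Mean over pixels of -[t*log(p) + (1-t)*log(1-p)]."""
    total = 0.0
    for t, p in zip(targets, predictions):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(targets)


def mean_squared_error(targets, predictions):
    """Mean over pixels of (t - p)^2."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)


# Hypothetical normalized pixel intensities and two candidate reconstructions.
pixels = [0.0, 0.0, 1.0, 0.5]
good = [0.05, 0.1, 0.9, 0.5]
bad = [0.9, 0.8, 0.1, 0.5]

for name, recon in (("good", good), ("bad", bad)):
    print(name,
          round(binary_cross_entropy(pixels, recon), 4),
          round(mean_squared_error(pixels, recon), 4))
```

One point worth noticing: for a grey pixel (target strictly between 0 and 1) the cross-entropy is not zero even when the prediction is exact, so the reported loss has a positive floor that depends on the data. This is one reason the values in the log cannot be compared directly with a mean squared error.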

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"metadata": {

|

|||

|

|

"id": "ohexDvCYrahC"

|

|||

|

|

},

|

|||

|

|

"source": [

|

|||

|

|

"The following code displays sample images from the test set (first row) and their reconstructions (second row)."

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "code",

|

|||

|

|

"execution_count": 16,

|

|||

|

|

"metadata": {

|

|||

|

|

"id": "2SC9R1TRTUgN"

|

|||

|

|

},

|

|||

|

|

"outputs": [

|

|||

|

|

{

|

|||

|

|

"data": {

|

|||

|

|

"image/png": "[base64 PNG data omitted: 2 x 10 grid of test digits (top row) and their reconstructions (bottom row)]",

|

|||

|

|

"text/plain": [

|

|||

|

|

"<Figure size 1440x288 with 20 Axes>"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

"metadata": {

|

|||

|

|

"needs_background": "light"

|

|||

|

|

},

|

|||

|

|

"output_type": "display_data"

|

|||

|

|

}

|

|||

|

|

],

|

|||

|

|

"source": [

|

|||

|

|

"import matplotlib.pyplot as plt\n",
"\n",
"# Reconstruct the test data with the autoencoder\n",
"decoded_imgs = autoencoder.predict(x_test)\n",
"\n",
"\n",
"n = 10\n",
"plt.figure(figsize=(20, 4))\n",
"for i in range(n):\n",
"    # Display the original image\n",
"    ax = plt.subplot(2, n, i+1)\n",
"    plt.imshow(x_test[i].reshape(28, 28))\n",
"    plt.gray()\n",
"    ax.get_xaxis().set_visible(False)\n",
"    ax.get_yaxis().set_visible(False)\n",
"\n",
"    # Display the image reconstructed by the autoencoder\n",
"    ax = plt.subplot(2, n, i+1 + n)\n",
"    plt.imshow(decoded_imgs[i].reshape(28, 28))\n",
"    plt.gray()\n",
"    ax.get_xaxis().set_visible(False)\n",
"    ax.get_yaxis().set_visible(False)\n",
"plt.show()"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"metadata": {

|

|||

|

|

"id": "4oV4eKk4p4Eg"

|

|||

|

|

},

|

|||

|

|

"source": [

|

|||

|

|

"# Tasks"

|

|||

|

|

]

|

|||

|

|

},

|

|||

|

|

{

|

|||

|

|

"cell_type": "markdown",

|

|||

|

|

"metadata": {

|

|||

|

|

"id": "zjMnHWNtrgwZ"

|

|||

|

|

},

|

|||

|

|

"source": [

|

|||

|

|

"Tasks\n",
"\n",
"1. To begin, look at the results obtained with the provided code. They look imperfect: the reconstructed images are noisy. Modify the provided code to turn the regression problem into a binary classification problem. The results should be much better! Keep this formulation (even though it is non-standard) for the rest of the lab.\n",
"2. With the latent dimension provided, the reconstruction error is (relatively) low. Plot a **curve** (a few points are enough) showing how the **reconstruction error varies with the dimension of the latent space**. What appears to be the smallest latent dimension that still gives a reasonable reconstruction of the data (with the network provided)?\n",
"3. To reduce the latent dimension even further, the capacity of the encoder and decoder networks has to be increased. Look again for the smallest latent dimension that gives a good reconstruction of the data, this time increasing the capacity of your autoencoder as much as you like.\n",
"4. Write a function that, given two images $I_1$ and $I_2$ from your test set, interpolates (in, say, 10 steps) between the latent representations of these two samples ($z_1 = $encoder($I_1$) and $z_2 = $encoder($I_2$)) and generates the images $I_i$ corresponding to the intermediate latent representations $z_i$. In pseudo-Python, this gives: \n",
"\n",
"```python\n",
"for i in range(10):\n",
"    z_i = z1 + i*(z2-z1)/10\n",
"    I_i = decoder(z_i)\n",
"```\n",
"Test this function with an autoencoder that has a low reconstruction error, first on two samples showing the same digit written differently, then on two different digits.\n",
"5. Finally, the code provided below downloads and prepares a [face dataset](http://vis-www.cs.umass.edu/lfw/). WARNING: here the images are $32\\times32$, in color, and therefore have 3 channels (unlike MNIST images, which have only one). By analogy with the previous question, the latent representation learned by an autoencoder could be used to morph between two faces. First try to train an autoencoder that reaches a low reconstruction error. What do you observe?\n",
"\n"

|

|||

|

|

]

|

|||

|

|

},
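The latent-space interpolation described in task 4 can be sketched as follows. This is a minimal illustration, not a reference solution: it uses plain Python lists so that it runs without Keras or a trained model, and the names `interpolate_latent`, `morph`, and the `decode` argument are hypothetical. In the notebook, `decode` would wrap the trained `decoder` model; with NumPy arrays the inner expression reduces to `z1 + t * (z2 - z1)`.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def interpolate_latent(z1: Sequence[float], z2: Sequence[float],
                       steps: int = 10) -> List[Vector]:
    """Return `steps` latent vectors evenly spaced from z1 to z2,
    both endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2 to include both endpoints")
    if len(z1) != len(z2):
        raise ValueError("z1 and z2 must have the same dimension")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # t runs from 0.0 (z1) to 1.0 (z2)
        path.append([a + t * (b - a) for a, b in zip(z1, z2)])
    return path


def morph(z1: Sequence[float], z2: Sequence[float],
          decode: Callable[[Vector], Vector], steps: int = 10) -> List[Vector]:
    """Decode every intermediate latent vector with the supplied function."""
    return [decode(z) for z in interpolate_latent(z1, z2, steps)]


# Toy check: 2-D latent space and an identity "decoder" as a placeholder.
frames = morph([0.0, 0.0], [1.0, 2.0], decode=lambda z: z, steps=5)
print(frames[0], frames[2], frames[-1])
```

A design note: the pseudo-code in the task divides by the number of steps, so its last point stops one step short of $z_2$. Dividing by `steps - 1`, as done here, makes the sequence start exactly at $z_1$ and end exactly at $z_2$; either convention is reasonable as long as it is applied consistently.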

|

|||

|

|

{

|

|||

|

|

"cell_type": "code",

|

|||

|

|

"execution_count": 33,

|

|||

|

|

"metadata": {},

|

|||

|

|

"outputs": [

|

|||

|

|

{

|

|||

|

|

"name": "stdout",

|

|||

|

|

"output_type": "stream",

|

|||

|

|

"text": [

|

|||

|

|

"Epoch 1/10\n",

|

|||

|

|

"469/469 [==============================] - 3s 6ms/step - loss: 0.2008 - val_loss: 0.1536\n",

|

|||

|

|

"Epoch 2/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.1478 - val_loss: 0.1415\n",

|

|||

|

|

"Epoch 3/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.1400 - val_loss: 0.1366\n",

|

|||

|

|

"Epoch 4/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.1360 - val_loss: 0.1335\n",

|

|||

|

|

"Epoch 5/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.1333 - val_loss: 0.1311\n",

|

|||

|

|

"Epoch 6/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1312 - val_loss: 0.1292\n",

|

|||

|

|

"Epoch 7/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1295 - val_loss: 0.1278\n",

|

|||

|

|

"Epoch 8/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1282 - val_loss: 0.1269\n",

|

|||

|

|

"Epoch 9/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1272 - val_loss: 0.1258\n",

|

|||

|

|

"Epoch 10/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1263 - val_loss: 0.1252\n",

|

|||

|

|

"Epoch 1/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1883 - val_loss: 0.1332\n",

|

|||

|

|

"Epoch 2/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1244 - val_loss: 0.1169\n",

|

|||

|

|

"Epoch 3/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1158 - val_loss: 0.1118\n",

|

|||

|

|

"Epoch 4/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1117 - val_loss: 0.1087\n",

|

|||

|

|

"Epoch 5/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1089 - val_loss: 0.1065\n",

|

|||

|

|

"Epoch 6/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1069 - val_loss: 0.1049\n",

|

|||

|

|

"Epoch 7/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1053 - val_loss: 0.1036\n",

|

|||

|

|

"Epoch 8/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1040 - val_loss: 0.1023\n",

|

|||

|

|

"Epoch 9/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1029 - val_loss: 0.1014\n",

|

|||

|

|

"Epoch 10/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1020 - val_loss: 0.1008\n",

|

|||

|

|

"Epoch 1/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1781 - val_loss: 0.1176\n",

|

|||

|

|

"Epoch 2/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1092 - val_loss: 0.1010\n",

|

|||

|

|

"Epoch 3/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0992 - val_loss: 0.0951\n",

|

|||

|

|

"Epoch 4/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0944 - val_loss: 0.0917\n",

|

|||

|

|

"Epoch 5/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0916 - val_loss: 0.0894\n",

|

|||

|

|

"Epoch 6/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0895 - val_loss: 0.0878\n",

|

|||

|

|

"Epoch 7/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0880 - val_loss: 0.0865\n",

|

|||

|

|

"Epoch 8/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0868 - val_loss: 0.0854\n",

|

|||

|

|

"Epoch 9/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0858 - val_loss: 0.0845\n",

|

|||

|

|

"Epoch 10/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0850 - val_loss: 0.0840\n",

|

|||

|

|

"Epoch 1/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1735 - val_loss: 0.1119\n",

|

|||

|

|

"Epoch 2/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.1029 - val_loss: 0.0943\n",

|

|||

|

|

"Epoch 3/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0923 - val_loss: 0.0885\n",

|

|||

|

|

"Epoch 4/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 4ms/step - loss: 0.0872 - val_loss: 0.0844\n",

|

|||

|

|

"Epoch 5/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0839 - val_loss: 0.0817\n",

|

|||

|

|

"Epoch 6/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0818 - val_loss: 0.0799\n",

|

|||

|

|

"Epoch 7/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0802 - val_loss: 0.0789\n",

|

|||

|

|

"Epoch 8/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0789 - val_loss: 0.0774\n",

|

|||

|

|

"Epoch 9/10\n",

|

|||

|

|

"469/469 [==============================] - 3s 5ms/step - loss: 0.0777 - val_loss: 0.0766\n",

|

|||

|

|

"Epoch 10/10\n",

|

|||

|

|

"469/469 [==============================] - 3s 5ms/step - loss: 0.0768 - val_loss: 0.0757\n",

|

|||

|

|

"Epoch 1/10\n",

|

|||

|

|

"469/469 [==============================] - 3s 5ms/step - loss: 0.1649 - val_loss: 0.1054\n",

|

|||

|

|

"Epoch 2/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0978 - val_loss: 0.0902\n",

|

|||

|

|

"Epoch 3/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0876 - val_loss: 0.0835\n",

|

|||

|

|

"Epoch 4/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0829 - val_loss: 0.0801\n",

|

|||

|

|

"Epoch 5/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0799 - val_loss: 0.0779\n",

|

|||

|

|

"Epoch 6/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0779 - val_loss: 0.0765\n",

|

|||

|

|

"Epoch 7/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0763 - val_loss: 0.0751\n",

|

|||

|

|

"Epoch 8/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0752 - val_loss: 0.0745\n",

|

|||

|

|

"Epoch 9/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0743 - val_loss: 0.0734\n",

|

|||

|

|

"Epoch 10/10\n",

|

|||

|

|

"469/469 [==============================] - 2s 5ms/step - loss: 0.0734 - val_loss: 0.0726\n",

|

|||

|

|

"Epoch 1/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.1591 - val_loss: 0.1011\n",
"Epoch 2/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0938 - val_loss: 0.0874\n",
"Epoch 3/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0848 - val_loss: 0.0814\n",
"Epoch 4/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0804 - val_loss: 0.0779\n",
"Epoch 5/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0777 - val_loss: 0.0759\n",
"Epoch 6/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0758 - val_loss: 0.0743\n",
"Epoch 7/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0744 - val_loss: 0.0731\n",
"Epoch 8/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0732 - val_loss: 0.0721\n",
"Epoch 9/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0723 - val_loss: 0.0713\n",
"Epoch 10/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0716 - val_loss: 0.0710\n",
"Epoch 1/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.1573 - val_loss: 0.0999\n",
"Epoch 2/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0931 - val_loss: 0.0858\n",
"Epoch 3/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0840 - val_loss: 0.0804\n",
"Epoch 4/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0796 - val_loss: 0.0772\n",
"Epoch 5/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0767 - val_loss: 0.0748\n",
"Epoch 6/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0749 - val_loss: 0.0734\n",
"Epoch 7/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0735 - val_loss: 0.0724\n",
"Epoch 8/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0725 - val_loss: 0.0716\n",
"Epoch 9/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0717 - val_loss: 0.0711\n",
"Epoch 10/10\n",
"469/469 [==============================] - 2s 4ms/step - loss: 0.0711 - val_loss: 0.0704\n"
]
}
],
"source": [
"from keras.layers import Input, Dense\n",
"from keras.models import Model\n",
"\n",
"# Input dimension (flattened 28x28 images)\n",
"input_img = Input(shape=(784,))\n",
"\n",
"errors = []\n",
"latent_dims = [8, 16, 32, 64, 128, 256, 512]\n",
"for latent_dim in latent_dims:\n",
"    # Encoder definition\n",
"    x = Dense(128, activation='relu')(input_img)\n",
"    encoded = Dense(latent_dim, activation='linear')(x)\n",
"\n",
"    # Decoder definition\n",
"    decoder_input = Input(shape=(latent_dim,))\n",
"    x = Dense(128, activation='relu')(decoder_input)\n",
"    decoded = Dense(784, activation='sigmoid')(x)\n",
"\n",
"    # Build separate models so that the encoder and the decoder can be used on their own\n",
"    encoder = Model(input_img, encoded)\n",
"    decoder = Model(decoder_input, decoded)\n",
"\n",
"    # Build the full autoencoder model\n",
"    encoded = encoder(input_img)\n",
"    decoded = decoder(encoded)\n",
"    autoencoder = Model(input_img, decoded)\n",
"\n",
"    autoencoder.compile(optimizer='Adam', loss='bce')\n",
"\n",
"    autoencoder.fit(\n",
"        x_train, x_train,\n",
"        epochs=10,\n",
"        batch_size=128,\n",
"        shuffle=True,\n",
"        validation_data=(x_test, x_test)\n",
"    )\n",
"\n",
"    # Record the final validation loss for this latent dimension\n",
"    errors.append(autoencoder.history.history[\"val_loss\"][-1])"
]
},
{
"cell_type": "code",
"execution_count": 35,
"metadata": {},
"outputs": [
{
"data": {
"text/plain": [
"[<matplotlib.lines.Line2D at 0x7f521837f280>]"
]
},
"execution_count": 35,
"metadata": {},
"output_type": "execute_result"
},
{
"data": {
"text/plain": [
"<Figure size 1440x720 with 1 Axes>"
]
},
"metadata": {
"needs_background": "light"
},
"output_type": "display_data"
}
],
"source": [
"# Plot the final validation loss against the latent dimension\n",
"plt.figure(figsize=(20, 10))\n",
"plt.plot(latent_dims, errors)"
]
},
{
"cell_type": "code",
"execution_count": 52,
"metadata": {},
"outputs": [],
"source": [
"from keras.datasets import mnist\n",
"import numpy as np\n",
"\n",
"# Load the MNIST dataset and normalize the pixel values to [0, 1]\n",
"(x_train, _), (x_test, _) = mnist.load_data()\n",
"\n",
"x_train = x_train.astype('float32') / 255.0\n",
"x_test = x_test.astype('float32') / 255.0"
]
},
{
"cell_type": "code",
"execution_count": 58,
"metadata": {},
"outputs": [
{
"name": "stdout",
"output_type": "stream",
"text": [
"Model: \"model_83\"\n",
"_________________________________________________________________\n",
" Layer (type)                Output Shape              Param #   \n",
"=================================================================\n",
" input_53 (InputLayer)       [(None, 28, 28, 1)]       0         \n",
"                                                                 \n",
" conv2d_37 (Conv2D)          (None, 28, 28, 64)        640       \n",
"                                                                 \n",
" conv2d_38 (Conv2D)          (None, 28, 28, 64)        36928     \n",
"                                                                 \n",
" max_pooling2d_8 (MaxPooling (None, 14, 14, 64)        0         \n",
" 2D)                                                             \n",
"                                                                 \n",
" conv2d_39 (Conv2D)          (None, 14, 14, 128)       73856     \n",
"                                                                 \n",
" conv2d_40 (Conv2D)          (None, 14, 14, 128)       147584    \n",
"                                                                 \n",
" max_pooling2d_9 (MaxPooling (None, 7, 7, 128)         0         \n",
" 2D)                                                             \n",
"                                                                 \n",
" flatten_2 (Flatten)         (None, 6272)              0         \n",
"                                                                 \n",
" dense_114 (Dense)           (None, 5)                 31365     \n",
"                                                                 \n",
"=================================================================\n",
"Total params: 290,373\n",
"Trainable params: 290,373\n",
"Non-trainable params: 0\n",
"_________________________________________________________________\n",
"None\n",
"Model: \"model_84\"\n",
"_________________________________________________________________\n",
" Layer (type)                Output Shape              Param #   \n",
"=================================================================\n",
" input_54 (InputLayer)       [(None, 5)]               0         \n",
"                                                                 \n",
" dense_115 (Dense)           (None, 6272)              37632     \n",
"                                                                 \n",
" reshape_2 (Reshape)         (None, 7, 7, 128)         0         \n",
"                                                                 \n",
" up_sampling2d_5 (UpSampling (None, 14, 14, 128)       0         \n",
" 2D)                                                             \n",
"                                                                 \n",
" conv2d_41 (Conv2D)          (None, 14, 14, 128)       147584    \n",
"                                                                 \n",
" conv2d_42 (Conv2D)          (None, 14, 14, 128)       147584    \n",
"                                                                 \n",
" up_sampling2d_6 (UpSampling (None, 28, 28, 128)       0         \n",
" 2D)                                                             \n",
"                                                                 \n",
" conv2d_43 (Conv2D)          (None, 28, 28, 64)        32832     \n",
"                                                                 \n",
" conv2d_44 (Conv2D)          (None, 28, 28, 1)         577       \n",
"                                                                 \n",
"=================================================================\n",
"Total params: 366,209\n",
"Trainable params: 366,209\n",
"Non-trainable params: 0\n",
"_________________________________________________________________\n",
"None\n",
"Model: \"model_85\"\n",
"_________________________________________________________________\n",
" Layer (type)                Output Shape              Param #   \n",
"=================================================================\n",
" input_53 (InputLayer)       [(None, 28, 28, 1)]       0         \n",
"                                                                 \n",
" model_83 (Functional)       (None, 5)                 290373    \n",
"                                                                 \n",
" model_84 (Functional)       (None, 28, 28, 1)         366209    \n",
"                                                                 \n",
"=================================================================\n",
"Total params: 656,582\n",
"Trainable params: 656,582\n",
"Non-trainable params: 0\n",
"_________________________________________________________________\n",
"Epoch 1/10\n",
"116/469 [======>.......................] - ETA: 3:19 - loss: 0.2379"
]
},
{
"ename": "KeyboardInterrupt",
"evalue": "",
"output_type": "error",
"traceback": [
"\u001b[0;31m---------------------------------------------------------------------------\u001b[0m",

"\u001b[0;31mKeyboardInterrupt\u001b[0m Traceback (most recent call last)",

"\u001b[1;32m/home/laurent/Documents/Cours/ENSEEIHT/S9 - IAM/IAM2022_TP_Autoencodeurs_Sujet.ipynb Cell 12\u001b[0m in \u001b[0;36m<module>\u001b[0;34m\u001b[0m\n\u001b[1;32m <a href='vscode-notebook-cell:/home/laurent/Documents/Cours/ENSEEIHT/S9%20-%20IAM/IAM2022_TP_Autoencodeurs_Sujet.ipynb#X16sZmlsZQ%3D%3D?line=50'>51</a>\u001b[0m autoencoder\u001b[39m.\u001b[39msummary()\n\u001b[1;32m <a href='vscode-notebook-cell:/home/laurent/Documents/Cours/ENSEEIHT/S9%20-%20IAM/IAM2022_TP_Autoencodeurs_Sujet.ipynb#X16sZmlsZQ%3D%3D?line=52'>53</a>\u001b[0m \u001b[39m# Entraînement de l'auto-encodeur. On utilise ici les données de test \u001b[39;00m\n\u001b[1;32m <a href='vscode-notebook-cell:/home/laurent/Documents/Cours/ENSEEIHT/S9%20-%20IAM/IAM2022_TP_Autoencodeurs_Sujet.ipynb#X16sZmlsZQ%3D%3D?line=53'>54</a>\u001b[0m \u001b[39m# pour surveiller l'évolution de l'erreur de reconstruction sur des données \u001b[39;00m\n\u001b[1;32m <a href='vscode-notebook-cell:/home/laurent/Documents/Cours/ENSEEIHT/S9%20-%20IAM/IAM2022_TP_Autoencodeurs_Sujet.ipynb#X16sZmlsZQ%3D%3D?line=54'>55</a>\u001b[0m \u001b[39m# non utilisées pendant l'entraînement et ainsi détecter le sur-apprentissage.\u001b[39;00m\n\u001b[0;32m---> <a href='vscode-notebook-cell:/home/laurent/Documents/Cours/ENSEEIHT/S9%20-%20IAM/IAM2022_TP_Autoencodeurs_Sujet.ipynb#X16sZmlsZQ%3D%3D?line=55'>56</a>\u001b[0m autoencoder\u001b[39m.\u001b[39;49mfit(x_train, x_train,\n\u001b[1;32m <a href='vscode-notebook-cell:/home/laurent/Documents/Cours/ENSEEIHT/S9%20-%20IAM/IAM2022_TP_Autoencodeurs_Sujet.ipynb#X16sZmlsZQ%3D%3D?line=56'>57</a>\u001b[0m epochs\u001b[39m=\u001b[39;49m\u001b[39m10\u001b[39;49m,\n\u001b[1;32m <a href='vscode-notebook-cell:/home/laurent/Documents/Cours/ENSEEIHT/S9%20-%20IAM/IAM2022_TP_Autoencodeurs_Sujet.ipynb#X16sZmlsZQ%3D%3D?line=57'>58</a>\u001b[0m batch_size\u001b[39m=\u001b[39;49m\u001b[39m128\u001b[39;49m,\n\u001b[1;32m <a 
href='vscode-notebook-cell:/home/laurent/Documents/Cours/ENSEEIHT/S9%20-%20IAM/IAM2022_TP_Autoencodeurs_Sujet.ipynb#X16sZmlsZQ%3D%3D?line=58'>59</a>\u001b[0m shuffle\u001b[39m=\u001b[39;49m\u001b[39mTrue\u001b[39;49;00m,\n\u001b[1;32m <a href='vscode-notebook-cell:/home/laurent/Documents/Cours/ENSEEIHT/S9%20-%20IAM/IAM2022_TP_Autoencodeurs_Sujet.ipynb#X16sZmlsZQ%3D%3D?line=59'>60</a>\u001b[0m validation_data\u001b[39m=\u001b[39;49m(x_test, x_test))\n",

"File \u001b[0;32m~/.local/lib/python3.10/site-packages/keras/utils/traceback_utils.py:64\u001b[0m, in \u001b[0;36mfilter_traceback.<locals>.error_handler\u001b[0;34m(*args, **kwargs)\u001b[0m\n\u001b[1;32m 62\u001b[0m filtered_tb \u001b[39m=\u001b[39m \u001b[39mNone\u001b[39;00m\n\u001b[1;32m 63\u001b[0m \u001b[39mtry\u001b[39;00m:\n\u001b[0;32m---> 64\u001b[0m \u001b[39mreturn\u001b[39;00m fn(\u001b[39m*\u001b[39;49margs, \u001b[39m*\u001b[39;49m\u001b[39m*\u001b[39;49mkwargs)\n\u001b[1;32m 65\u001b[0m \u001b[39mexcept\u001b[39;00m \u001b[39mException\u001b[39;00m \u001b[39mas\u001b[39;00m e: \u001b[39m# pylint: disable=broad-except\u001b[39;00m\n\u001b[1;32m 66\u001b[0m filtered_tb \u001b[39m=\u001b[39m _process_traceback_frames(e\u001b[39m.\u001b[39m__traceback__)\n",

"File \u001b[0;32m~/.local/lib/python3.10/site-packages/keras/engine/training.py:1384\u001b[0m, in \u001b[0;36mModel.fit\u001b[0;34m(self, x, y, batch_size, epochs, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, steps_per_epoch, validation_steps, validation_batch_size, validation_freq, max_queue_size, workers, use_multiprocessing)\u001b[0m\n\u001b[1;32m 1377\u001b[0m \u001b[39mwith\u001b[39;00m tf\u001b[39m.\u001b[39mprofiler\u001b[39m.\u001b[39mexperimental\u001b[39m.\u001b[39mTrace(\n\u001b[1;32m 1378\u001b[0m \u001b[39m'\u001b[39m\u001b[39mtrain\u001b[39m\u001b[39m'\u001b[39m,\n\u001b[1;32m 1379\u001b[0m epoch_num\u001b[39m=\u001b[39mepoch,\n\u001b[1;32m 1380\u001b[0m step_num\u001b[39m=\u001b[39mstep,\n\u001b[1;32m 1381\u001b[0m batch_size\u001b[39m=\u001b[39mbatch_size,\n\u001b[1;32m 1382\u001b[0m _r\u001b[39m=\u001b[39m\u001b[39m1\u001b[39m):\n\u001b[1;32m 1383\u001b[0m callbacks\u001b[39m.\u001b[39mon_train_batch_begin(step)\n\u001b[0;32m-> 1384\u001b[0m tmp_logs \u001b[39m=\u001b[39m \u001b[39mself\u001b[39;49m\u001b[39m.\u001b[39;49mtrain_function(iterator)\n\u001b[1;32m 1385\u001b[0m \u001b[39mif\u001b[39;00m data_handler\u001b[39m.\u001b[39mshould_sync:\n\u001b[1;32m 1386\u001b[0m context\u001b[39m.\u001b[39masync_wait()\n",

"File \u001b[0;32m~/.local/lib/python3.10/site-packages/tensorflow/python/util/traceback_utils.py:150\u001b[0m, in \u001b[0;36mfilter_traceback.<locals>.error_handler\u001b[0;34m(*args, **kwargs)\u001b[0m\n\u001b[1;32m 148\u001b[0m filtered_tb \u001b[39m=\u001b[39m \u001b[39mNone\u001b[39;00m\n\u001b[1;32m 149\u001b[0m \u001b[39mtry\u001b[39;00m:\n\u001b[0;32m--> 150\u001b[0m \u001b[39mreturn\u001b[39;00m fn(\u001b[39m*\u001b[39;49margs, \u001b[39m*\u001b[39;49m\u001b[39m*\u001b[39;49mkwargs)\n\u001b[1;32m 151\u001b[0m \u001b[39mexcept\u001b[39;00m \u001b[39mException\u001b[39;00m \u001b[39mas\u001b[39;00m e:\n\u001b[1;32m 152\u001b[0m filtered_tb \u001b[39m=\u001b[39m _process_traceback_frames(e\u001b[39m.\u001b[39m__traceback__)\n",

"File \u001b[0;32m~/.local/lib/python3.10/site-packages/tensorflow/python/eager/def_function.py:915\u001b[0m, in \u001b[0;36mFunction.__call__\u001b[0;34m(self, *args, **kwds)\u001b[0m\n\u001b[1;32m 912\u001b[0m compiler \u001b[39m=\u001b[39m \u001b[39m\"\u001b[39m\u001b[39mxla\u001b[39m\u001b[39m\"\u001b[39m \u001b[39mif\u001b[39;00m \u001b[39mself\u001b[39m\u001b[39m.\u001b[39m_jit_compile \u001b[39melse\u001b[39;00m \u001b[39m\"\u001b[39m\u001b[39mnonXla\u001b[39m\u001b[39m\"\u001b[39m\n\u001b[1;32m 914\u001b[0m \u001b[39mwith\u001b[39;00m OptionalXlaContext(\u001b[39mself\u001b[39m\u001b[39m.\u001b[39m_jit_compile):\n\u001b[0;32m--> 915\u001b[0m result \u001b[39m=\u001b[39m \u001b[39mself\u001b[39;49m\u001b[39m.\u001b[39;49m_call(\u001b[39m*\u001b[39;49margs, \u001b[39m*\u001b[39;49m\u001b[39m*\u001b[39;49mkwds)\n\u001b[1;32m 917\u001b[0m new_tracing_count \u001b[39m=\u001b[39m \u001b[39mself\u001b[39m\u001b[39m.\u001b[39mexperimental_get_tracing_count()\n\u001b[1;32m 918\u001b[0m without_tracing \u001b[39m=\u001b[39m (tracing_count \u001b[39m==\u001b[39m new_tracing_count)\n",

"File \u001b[0;32m~/.local/lib/python3.10/site-packages/tensorflow/python/eager/def_function.py:947\u001b[0m, in \u001b[0;36mFunction._call\u001b[0;34m(self, *args, **kwds)\u001b[0m\n\u001b[1;32m 944\u001b[0m \u001b[39mself\u001b[39m\u001b[39m.\u001b[39m_lock\u001b[39m.\u001b[39mrelease()\n\u001b[1;32m 945\u001b[0m \u001b[39m# In this case we have created variables on the first call, so we run the\u001b[39;00m\n\u001b[1;32m 946\u001b[0m \u001b[39m# defunned version which is guaranteed to never create variables.\u001b[39;00m\n\u001b[0;32m--> 947\u001b[0m \u001b[39mreturn\u001b[39;00m \u001b[39mself\u001b[39;49m\u001b[39m.\u001b[39;49m_stateless_fn(\u001b[39m*\u001b[39;49margs, \u001b[39m*\u001b[39;49m\u001b[39m*\u001b[39;49mkwds) \u001b[39m# pylint: disable=not-callable\u001b[39;00m\n\u001b[1;32m 948\u001b[0m \u001b[39melif\u001b[39;00m \u001b[39mself\u001b[39m\u001b[39m.\u001b[39m_stateful_fn \u001b[39mis\u001b[39;00m \u001b[39mnot\u001b[39;00m \u001b[39mNone\u001b[39;00m:\n\u001b[1;32m 949\u001b[0m \u001b[39m# Release the lock early so that multiple threads can perform the call\u001b[39;00m\n\u001b[1;32m 950\u001b[0m \u001b[39m# in parallel.\u001b[39;00m\n\u001b[1;32m 951\u001b[0m \u001b[39mself\u001b[39m\u001b[39m.\u001b[39m_lock\u001b[39m.\u001b[39mrelease()\n",

"File \u001b[0;32m~/.local/lib/python3.10/site-packages/tensorflow/python/eager/function.py:2956\u001b[0m, in \u001b[0;36mFunction.__call__\u001b[0;34m(self, *args, **kwargs)\u001b[0m\n\u001b[1;32m 2953\u001b[0m \u001b[39mwith\u001b[39;00m \u001b[39mself\u001b[39m\u001b[39m.\u001b[39m_lock:\n\u001b[1;32m 2954\u001b[0m (graph_function,\n\u001b[1;32m 2955\u001b[0m filtered_flat_args) \u001b[39m=\u001b[39m \u001b[39mself\u001b[39m\u001b[39m.\u001b[39m_maybe_define_function(args, kwargs)\n\u001b[0;32m-> 2956\u001b[0m \u001b[39mreturn\u001b[39;00m graph_function\u001b[39m.\u001b[39;49m_call_flat(\n\u001b[1;32m 2957\u001b[0m filtered_flat_args, captured_inputs\u001b[39m=\u001b[39;49mgraph_function\u001b[39m.\u001b[39;49mcaptured_inputs)\n",

"File \u001b[0;32m~/.local/lib/python3.10/site-packages/tensorflow/python/eager/function.py:1853\u001b[0m, in \u001b[0;36mConcreteFunction._call_flat\u001b[0;34m(self, args, captured_inputs, cancellation_manager)\u001b[0m\n\u001b[1;32m 1849\u001b[0m possible_gradient_type \u001b[39m=\u001b[39m gradients_util\u001b[39m.\u001b[39mPossibleTapeGradientTypes(args)\n\u001b[1;32m 1850\u001b[0m \u001b[39mif\u001b[39;00m (possible_gradient_type \u001b[39m==\u001b[39m gradients_util\u001b[39m.\u001b[39mPOSSIBLE_GRADIENT_TYPES_NONE\n\u001b[1;32m 1851\u001b[0m \u001b[39mand\u001b[39;00m executing_eagerly):\n\u001b[1;32m 1852\u001b[0m \u001b[39m# No tape is watching; skip to running the function.\u001b[39;00m\n\u001b[0;32m-> 1853\u001b[0m \u001b[39mreturn\u001b[39;00m \u001b[39mself\u001b[39m\u001b[39m.\u001b[39m_build_call_outputs(\u001b[39mself\u001b[39;49m\u001b[39m.\u001b[39;49m_inference_function\u001b[39m.\u001b[39;49mcall(\n\u001b[1;32m 1854\u001b[0m ctx, args, cancellation_manager\u001b[39m=\u001b[39;49mcancellation_manager))\n\u001b[1;32m 1855\u001b[0m forward_backward \u001b[39m=\u001b[39m \u001b[39mself\u001b[39m\u001b[39m.\u001b[39m_select_forward_and_backward_functions(\n\u001b[1;32m 1856\u001b[0m args,\n\u001b[1;32m 1857\u001b[0m possible_gradient_type,\n\u001b[1;32m 1858\u001b[0m executing_eagerly)\n\u001b[1;32m 1859\u001b[0m forward_function, args_with_tangents \u001b[39m=\u001b[39m forward_backward\u001b[39m.\u001b[39mforward()\n",

"File \u001b[0;32m~/.local/lib/python3.10/site-packages/tensorflow/python/eager/function.py:499\u001b[0m, in \u001b[0;36m_EagerDefinedFunction.call\u001b[0;34m(self, ctx, args, cancellation_manager)\u001b[0m\n\u001b[1;32m 497\u001b[0m \u001b[39mwith\u001b[39;00m _InterpolateFunctionError(\u001b[39mself\u001b[39m):\n\u001b[1;32m 498\u001b[0m \u001b[39mif\u001b[39;00m cancellation_manager \u001b[39mis\u001b[39;00m \u001b[39mNone\u001b[39;00m:\n\u001b[0;32m--> 499\u001b[0m outputs \u001b[39m=\u001b[39m execute\u001b[39m.\u001b[39;49mexecute(\n\u001b[1;32m 500\u001b[0m \u001b[39mstr\u001b[39;49m(\u001b[39mself\u001b[39;49m\u001b[39m.\u001b[39;49msignature\u001b[39m.\u001b[39;49mname),\n\u001b[1;32m 501\u001b[0m num_outputs\u001b[39m=\u001b[39;49m\u001b[39mself\u001b[39;49m\u001b[39m.\u001b[39;49m_num_outputs,\n\u001b[1;32m 502\u001b[0m inputs\u001b[39m=\u001b[39;49margs,\n\u001b[1;32m 503\u001b[0m attrs\u001b[39m=\u001b[39;49mattrs,\n\u001b[1;32m 504\u001b[0m ctx\u001b[39m=\u001b[39;49mctx)\n\u001b[1;32m 505\u001b[0m \u001b[39melse\u001b[39;00m:\n\u001b[1;32m 506\u001b[0m outputs \u001b[39m=\u001b[39m execute\u001b[39m.\u001b[39mexecute_with_cancellation(\n\u001b[1;32m 507\u001b[0m \u001b[39mstr\u001b[39m(\u001b[39mself\u001b[39m\u001b[39m.\u001b[39msignature\u001b[39m.\u001b[39mname),\n\u001b[1;32m 508\u001b[0m num_outputs\u001b[39m=\u001b[39m\u001b[39mself\u001b[39m\u001b[39m.\u001b[39m_num_outputs,\n\u001b[0;32m (...)\u001b[0m\n\u001b[1;32m 511\u001b[0m ctx\u001b[39m=\u001b[39mctx,\n\u001b[1;32m 512\u001b[0m cancellation_manager\u001b[39m=\u001b[39mcancellation_manager)\n",

"File \u001b[0;32m~/.local/lib/python3.10/site-packages/tensorflow/python/eager/execute.py:54\u001b[0m, in \u001b[0;36mquick_execute\u001b[0;34m(op_name, num_outputs, inputs, attrs, ctx, name)\u001b[0m\n\u001b[1;32m 52\u001b[0m \u001b[39mtry\u001b[39;00m:\n\u001b[1;32m 53\u001b[0m ctx\u001b[39m.\u001b[39mensure_initialized()\n\u001b[0;32m---> 54\u001b[0m tensors \u001b[39m=\u001b[39m pywrap_tfe\u001b[39m.\u001b[39;49mTFE_Py_Execute(ctx\u001b[39m.\u001b[39;49m_handle, device_name, op_name,\n\u001b[1;32m 55\u001b[0m inputs, attrs, num_outputs)\n\u001b[1;32m 56\u001b[0m \u001b[39mexcept\u001b[39;00m core\u001b[39m.\u001b[39m_NotOkStatusException \u001b[39mas\u001b[39;00m e:\n\u001b[1;32m 57\u001b[0m \u001b[39mif\u001b[39;00m name \u001b[39mis\u001b[39;00m \u001b[39mnot\u001b[39;00m \u001b[39mNone\u001b[39;00m:\n",

"\u001b[0;31mKeyboardInterrupt\u001b[0m: "

]
}
],
"source": [
"from keras.layers import Input, Dense\n",
"from keras.models import Model\n",
"\n",
"import keras\n",
"from keras.layers import *\n",
"from keras import *\n",
"\n",
"# Input dimension\n",
"input_img = Input(shape=(28, 28, 1))\n",
"latent_dim = 5\n",
"\n",
"# Encoder definition\n",
"x = Conv2D(64, 3, activation = 'relu', padding = 'same', kernel_initializer = 'he_normal')(input_img)\n",